Multi-modal day and night semantic segmentation of objects using label transfer

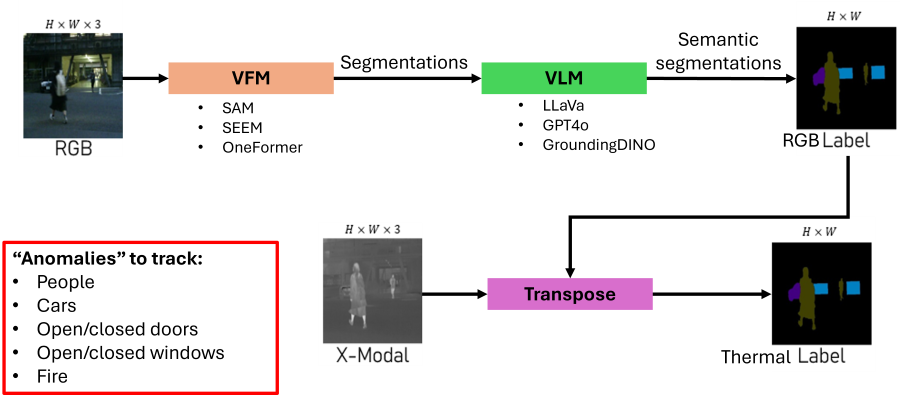

RGB cameras are inexpensive, lightweight sensors that provide information-dense data for tasks such as localisation, 3D reconstruction, and scene understanding. Supervised training of scene understanding models, in particular semantic segmentation models, is labour-intensive because a human must annotate every pixel of each image. To automate annotation, state-of-the-art approaches rely on automated labelling that combines Vision Foundation Models (VFMs) with Vision Language Models (VLMs). A VFM such as SAM first performs a base segmentation by grouping pixels that belong to the same object. Each segmented object is then passed to a VLM such as LLaVA or GPT-4, which attaches a text label to it. This pipeline requires little human effort but is computationally very expensive, so it cannot be used for real-time inference on embedded devices. A smaller network is therefore trained to imitate the pipeline's output, so that segmentation runs at higher inference speeds on embedded systems.
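As an illustration of the automated labelling step described above, the following minimal sketch composes class-agnostic masks and per-object text labels into a per-pixel class map. The callables `propose_masks` and `name_object` are hypothetical stand-ins for a VFM and a VLM, not real library calls, and the class list and numeric ids are assumptions chosen to match the object classes named in this proposal.

```python
from typing import Callable, Dict, List

import numpy as np

# Assumed class ids for illustration; 0 is background / unknown.
CLASS_IDS: Dict[str, int] = {"person": 1, "car": 2, "door": 3, "window": 4}


def auto_label(
    image: np.ndarray,
    propose_masks: Callable[[np.ndarray], List[np.ndarray]],
    name_object: Callable[[np.ndarray, np.ndarray], str],
) -> np.ndarray:
    """Build a per-pixel class map from object masks and text labels.

    propose_masks is a placeholder for a VFM and returns boolean HxW masks.
    name_object is a placeholder for a VLM and returns a text label per mask.
    """
    label_map = np.zeros(image.shape[:2], dtype=np.uint8)
    masks = propose_masks(image)
    # Paint large masks first so that smaller objects end up on top.
    for mask in sorted(masks, key=lambda m: int(m.sum()), reverse=True):
        text = name_object(image, mask).strip().lower()
        class_id = CLASS_IDS.get(text, 0)  # unknown labels stay background
        if class_id:
            label_map[mask] = class_id
    return label_map


if __name__ == "__main__":
    # Toy stand-ins for the two models, only to show the data flow.
    image = np.zeros((4, 6, 3), dtype=np.uint8)
    car = np.zeros((4, 6), dtype=bool)
    car[1:4, 0:4] = True
    person = np.zeros((4, 6), dtype=bool)
    person[2:4, 2:3] = True
    print(auto_label(image, lambda img: [person, car],
                     lambda img, m: "car" if m.sum() > 4 else "person"))
```

The resulting label maps could then serve as training targets for the smaller network. How overlapping masks are resolved (here: smaller objects win) is a design choice of this sketch.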

The performance of RGB cameras degrades in adverse conditions, such as at night or in the presence of smoke. In these situations, long-wave infrared (thermal) cameras are a good alternative because they are unaffected by lighting conditions or airborne particles. Research into semantic segmentation of thermal images is, however, limited for two reasons: (1) annotated data is scarce, and (2) thermal images often have low contrast, which makes object boundaries harder to distinguish. Opportunities to research thermal segmentation methods have nevertheless increased in recent years, thanks to technological improvements in thermal cameras and the release of more advanced computer vision techniques for enhancing the contrast of thermal images.
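To make the low-contrast issue concrete, the sketch below applies a basic percentile contrast stretch to a raw thermal frame. This is a deliberately simple baseline and not one of the more advanced enhancement techniques referred to above. The function name, the percentile defaults, and the assumption that the camera delivers raw 16-bit values are illustrative only.

```python
import numpy as np


def stretch_contrast(thermal: np.ndarray,
                     low_pct: float = 1.0,
                     high_pct: float = 99.0) -> np.ndarray:
    """Linearly rescale raw thermal values to 8-bit with percentile clipping.

    Values below the low percentile map to 0 and values above the high
    percentile map to 255, which spreads a narrow temperature range over
    the full display range.
    """
    lo, hi = np.percentile(thermal, [low_pct, high_pct])
    if hi <= lo:  # flat image: nothing to stretch
        return np.zeros(thermal.shape, dtype=np.uint8)
    scaled = (thermal.astype(np.float64) - lo) / (hi - lo)
    return (np.clip(scaled, 0.0, 1.0) * 255.0).round().astype(np.uint8)
```

Clipping at percentiles rather than at the minimum and maximum keeps a few very hot or very cold pixels from compressing the rest of the range.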

The Smart Mechatronics And Robotics (SMART) research group at Saxion University of Applied Sciences is researching thermal semantic segmentation for applications where RGB cameras do not work, such as a security drone operating at night and a firefighting robot at an indoor fire incident.

During their MSc thesis, the student will investigate the following:

Training a segmentation network on RGB images to segment persons, cars, doors and windows in indoor terrestrial as well as outdoor aerial datasets. The student may need to create annotated images to generate sufficient training data.

Transferring labels from RGB images to thermal images to annotate the thermal data.

Training a segmentation network on thermal images to segment persons, cars, doors and windows in indoor terrestrial as well as outdoor aerial datasets.

Comparing the performance of the RGB and thermal segmentation networks in different lighting conditions.
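The label-transfer and comparison tasks above can be sketched as follows. This is one possible approach under stated assumptions, not the method prescribed for the thesis: it assumes that a 3x3 homography between the RGB and thermal image planes is known (for example from a calibration step), which only holds approximately for real scenes with depth variation. The names `transfer_labels`, `per_class_iou` and `IGNORE_ID` are hypothetical, and intersection-over-union is used here as a common segmentation metric.

```python
import numpy as np

IGNORE_ID = 255  # assumed marker for thermal pixels with no RGB counterpart


def transfer_labels(rgb_labels: np.ndarray,
                    H_rgb_to_thermal: np.ndarray,
                    thermal_shape: tuple) -> np.ndarray:
    """Warp an RGB label map into the thermal image frame.

    H_rgb_to_thermal is an assumed 3x3 homography mapping RGB pixel
    coordinates (x, y, 1) to thermal pixel coordinates. Nearest-neighbour
    sampling keeps class ids discrete.
    """
    h, w = thermal_shape
    ys, xs = np.mgrid[0:h, 0:w]
    thermal_pts = np.stack([xs.ravel(), ys.ravel(), np.ones(h * w)])
    # Inverse mapping: for every thermal pixel, look up its RGB location.
    rgb_pts = np.linalg.inv(H_rgb_to_thermal) @ thermal_pts
    rgb_x = np.rint(rgb_pts[0] / rgb_pts[2]).astype(int)
    rgb_y = np.rint(rgb_pts[1] / rgb_pts[2]).astype(int)
    inside = ((rgb_x >= 0) & (rgb_x < rgb_labels.shape[1]) &
              (rgb_y >= 0) & (rgb_y < rgb_labels.shape[0]))
    out = np.full(h * w, IGNORE_ID, dtype=rgb_labels.dtype)
    out[inside] = rgb_labels[rgb_y[inside], rgb_x[inside]]
    return out.reshape(h, w)


def per_class_iou(pred: np.ndarray, target: np.ndarray, num_classes: int) -> list:
    """Intersection-over-union per class, skipping IGNORE_ID target pixels."""
    valid = target != IGNORE_ID
    ious = []
    for c in range(num_classes):
        p = (pred == c) & valid
        t = (target == c) & valid
        union = int((p | t).sum())
        # Classes absent from both prediction and target give NaN.
        ious.append(float((p & t).sum()) / union if union else float("nan"))
    return ious
```

Computing `per_class_iou` separately for the RGB and thermal networks on day and night subsets gives one way to compare them across lighting conditions. In practice, a library routine such as OpenCV's `warpPerspective` with nearest-neighbour interpolation performs the same kind of warp more efficiently.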

This MSc thesis is a collaboration between UT-ITC and Saxion-SMART. The student will therefore spend part of the thesis period at Saxion, where the required hardware and testing facilities are available. At the end of the thesis, the student should produce the following deliverable: the MSc thesis, describing the problem, methodology, results and conclusion.

As an internship at Saxion:

This assignment can also be scoped as a 14-16 week internship, focusing on the transfer of labels from RGB to thermal in depth scenes.

In this case, please contact: Benjamin van Manen - b.r.vanmanen@utwente.nl